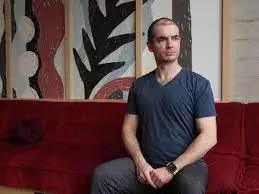

Ilya Sutskever Launches Safe Superintelligence Inc. After Leaving OpenAI

Breakaway AI Venture

Ilya Sutskever, a co-founder of OpenAI, has launched a rival AI startup, Safe Superintelligence (SSI) Inc., just a month after leaving OpenAI, where he had taken part in an unsuccessful attempt to oust CEO Sam Altman. Sutskever, one of the most respected figures in AI research, said SSI aims to build “safe superintelligence” and described it as the world’s first lab dedicated to that single goal and product.

Sutskever unveiled the company on Wednesday in a post on X, billing it as the world’s first “straight-shot SSI lab.”

Founding Team and Vision

SSI was co-founded by Sutskever, former OpenAI researcher Daniel Levy, and AI investor and entrepreneur Daniel Gross. Gross, a former partner at Y Combinator, has backed companies including GitHub and Instacart, as well as AI ventures such as Perplexity.ai and Character.ai. SSI has not disclosed its investors, but the founders stress that the company’s sole focus is developing safe superintelligence, insulated from revenue pressures. That singular focus, they argue, will help attract top talent.

Strategic Positioning and Comparison to OpenAI

Sutskever and his co-founders said SSI’s singular mission means no distraction from management overhead or product cycles. That sets it apart from OpenAI, which was founded as a nonprofit research lab but has grown into a fast-expanding business under Altman’s leadership. Altman maintains that OpenAI’s core mission is unchanged; SSI, by contrast, says it will stay focused purely on safety and progress, free of commercial constraints. The company will have offices in Palo Alto and Tel Aviv.

Background and Recent Turmoil at OpenAI

Sutskever’s exit followed a period of internal conflict over OpenAI’s direction and safety priorities. In November 2023, Sutskever and other board members moved to remove Altman as CEO, a decision that shocked investors and staff. Altman was reinstated days later with a new board, and Sutskever left the company in May 2024, saying he was excited about a new project that was personally meaningful to him.

Previous Breakaways and Industry Challenges

Sutskever is not the first OpenAI executive to leave and pursue safety-focused AI. In 2021, Dario Amodei, OpenAI’s former head of AI safety, founded Anthropic, which has since raised $4 billion from Amazon and hundreds of millions more from venture capitalists, reaching a valuation of more than $18 billion. Jan Leike, who co-led OpenAI’s superalignment team with Sutskever, also recently left and joined Anthropic, saying OpenAI’s safety culture had been sidelined in favor of product development.
